Two papers have been accepted to the 2019 IEEE Conference on Decision and Control (CDC):

Stochastic subgradient methods for dynamic programming in continuous state and action spaces

Sunho Jang, and Insoon Yang

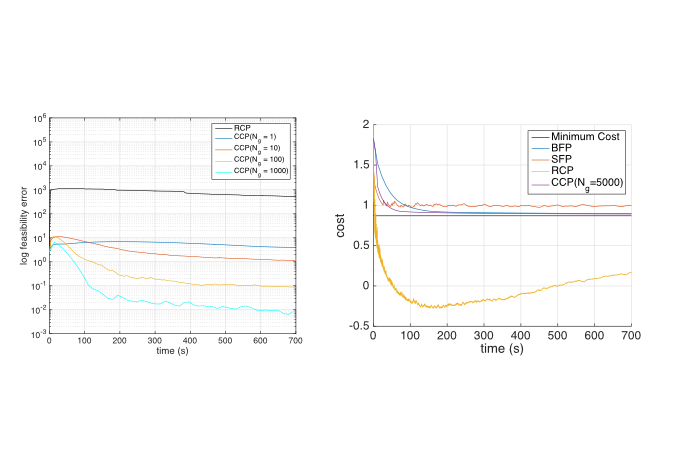

Abstract: In this paper, we propose a numerical method for dynamic programming in continuous state and action spaces. Our method only discretizes the state space and consists of two stages. In the first stage, we approximate the output of the Bellman operator at a particular state as a convex program. By employing an auxiliary variable to assign the contribution of each grid point or node to the next state, the proposed method does not require any explicit interpolation in computing the optimal value function or an optimal policy. We also show the uniform convergence properties of this approach. In the second stage, we focus on solving the convex program with many constraints. To efficiently perform the stochastic subgradient descent while avoiding the full projection onto the high-dimensional feasible set, we develop a novel algorithm that samples, in a coordinated fashion, a mini-batch for a subgradient and another for projection. We show several salient properties of this algorithm, including convergence, and a reduction in the feasibility error and in the variance of the stochastic subgradient.

On improving the robustness of reinforcement learning-based controllers using disturbance observer

Jeong Woo Kim, Hyungbo Shim, and Insoon Yang

Abstract: Contrary to online reinforcement learning (RL), the performance of a controller designed via offline RL through a simulation model is easily deteriorated when applied to the real physical plant due to real-time external disturbances and plant uncertainties. In this paper, an idea to enhance the robustness of the RL-based controller against these uncertainties is proposed by utilizing the disturbance observer (DOB). Comparing to online RL which asymptotically improves the performance of robustness or optimality, the proposed idea can guarantee the performance immediately when the control is applied to the real plant, and be combined with any RL algorithm. We present the conditions for uncertain plants to achieve sub-optimality of control performance after discussing the role of the DOB and its implementation. Finally, an inverted pendulum is chosen as a target system for simulation and the performance of the proposed idea is verified through the simulation results.