The paper “Accelerated gradient methods for geodesically convex optimization: Tractable algorithms and convergence analysis” has been accepted to the International Conference on Machine Learning (ICML).

Accelerated gradient methods for geodesically convex optimization: Tractable algorithms and convergence analysis

by Jungbin Kim and Insoon Yang

Abstract:

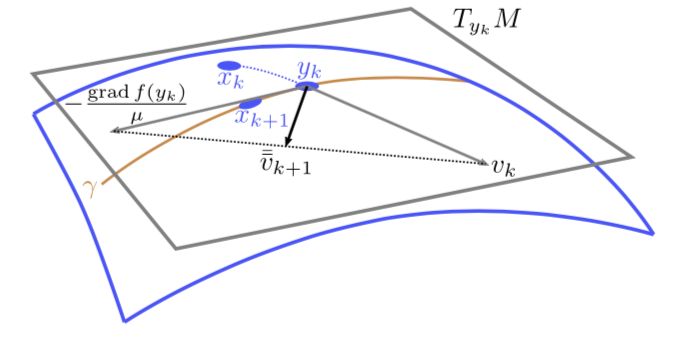

We propose computationally tractable accelerated first-order methods for Riemannian optimization, extending the Nesterov accelerated gradient (NAG) method. For both geodesically convex and geodesically strongly convex objective functions, our algorithms are shown to have the same iteration complexities as those for the NAG method on Euclidean spaces, under only standard assumptions. To our knowledge, the proposed scheme is the first fully accelerated method for geodesically convex optimization problems. Our convergence analysis makes use of novel metric distortion lemmas as well as carefully designed potential functions. A connection with the continuous-time dynamics for modeling Riemannian acceleration in (Alimisis et al., 2020) is also identified by letting the stepsize tend to zero. We validate our theoretical results through numerical experiments.
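For reference, here is a minimal sketch of the Euclidean NAG method that the paper extends. It is not the Riemannian algorithm proposed in the paper, whose details are not given here.

The sketch makes these assumptions:

- **Stepsize.** It uses the standard 1/L.
- **Momentum.** It uses two common textbook choices, which are not taken from the paper:
  - the constant coefficient (√κ - 1)/(√κ + 1), with κ = L/μ, for μ-strongly convex objectives;
  - k/(k + 3) for merely convex objectives.
- **Illustrative names.** The function name `nag` and the diagonal quadratic test problem are hypothetical.

On L-smooth objectives, these two schedules attain the Euclidean iteration complexities O(√(L/ε)) and O(√κ log(1/ε)). These are the benchmark rates the abstract refers to.

```python
import math
from typing import Callable, List

Vector = List[float]


def nag(
    grad: Callable[[Vector], Vector],
    x0: Vector,
    L: float,
    mu: float = 0.0,
    iters: int = 200,
) -> Vector:
    """Euclidean Nesterov accelerated gradient (illustrative sketch, not the paper's method).

    mu > 0: constant momentum (sqrt(kappa) - 1) / (sqrt(kappa) + 1), kappa = L / mu.
    mu == 0: convex-case momentum k / (k + 3).
    """
    x_prev = list(x0)
    y = list(x0)
    for k in range(iters):
        g = grad(y)
        # Gradient step with stepsize 1/L, taken from the extrapolated point y.
        x = [yi - gi / L for yi, gi in zip(y, g)]
        if mu > 0:
            root_kappa = math.sqrt(L / mu)
            beta = (root_kappa - 1) / (root_kappa + 1)
        else:
            beta = k / (k + 3)
        # Momentum step: extrapolate along the latest displacement x - x_prev.
        y = [xi + beta * (xi - xpi) for xi, xpi in zip(x, x_prev)]
        x_prev = x
    return x_prev


# Hypothetical test problem: f(x) = 0.5 * sum(a_i * x_i^2), so mu = 1 and L = 100.
A = [1.0, 10.0, 100.0]


def f(x: Vector) -> float:
    return 0.5 * sum(a * xi * xi for a, xi in zip(A, x))


def grad_f(x: Vector) -> Vector:
    return [a * xi for a, xi in zip(A, x)]


if __name__ == "__main__":
    x0 = [1.0, 1.0, 1.0]
    print("strongly convex schedule:", f(nag(grad_f, x0, L=100.0, mu=1.0, iters=200)))
    print("convex schedule:", f(nag(grad_f, x0, L=100.0, iters=500)))
```

On this quadratic, both schedules drive the iterate toward the minimizer x* = 0. The constant-momentum variant uses the strong-convexity parameter μ and converges linearly, roughly at the rate (1 - 1/√κ)^k. The k/(k + 3) variant does not need μ and guarantees an O(1/k²) decrease in f.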
