The paper “Unifying Nesterov’s accelerated gradient methods for convex and strongly convex objective functions” has been accepted for an oral presentation at the 40th International Conference on Machine Learning (ICML).

Unifying Nesterov’s accelerated gradient methods for convex and strongly convex objective functions

by Jungbin Kim and Insoon Yang

Abstract:

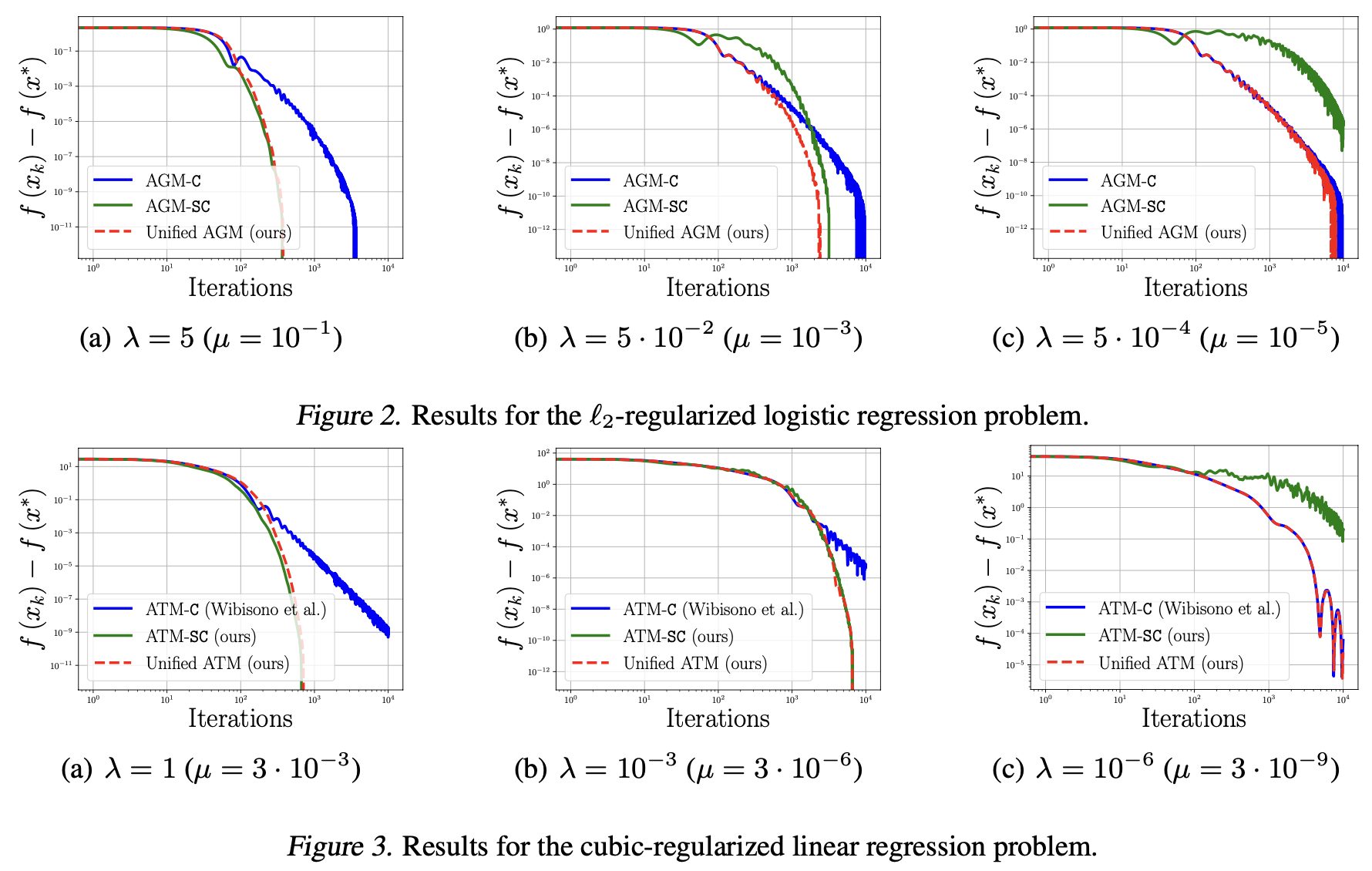

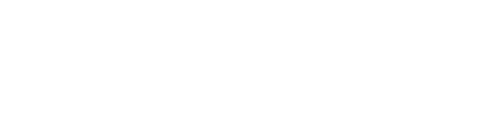

Although Nesterov’s accelerated gradient method (AGM) has been studied from various perspectives, it remains unclear why the most popular forms of AGMs must handle convex and strongly convex objective functions separately. To address this inconsistency, we propose a novel unified framework for Lagrangians, ordinary differential equation (ODE) models, and algorithms. As a special case, our new simple momentum algorithm, which we call the unified AGM, seamlessly bridges the gap between the two most popular forms of Nesterov’s AGM and has a superior convergence guarantee compared to existing algorithms for non-strongly convex objective functions. This property is beneficial in practice when considering ill-conditioned $\mu$-strongly convex objective functions (with small $\mu$). Furthermore, we generalize this algorithm and the corresponding ODE model to the higher-order non-Euclidean setting. Last but not least, our unified framework is used to construct the unified AGM-G ODE, a novel ODE model for minimizing the gradient norm of strongly convex functions.
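For readers less familiar with the two popular forms of Nesterov's AGM that the abstract refers to, here is a minimal sketch of their textbook versions. The first targets L-smooth convex functions and uses an increasing momentum coefficient k/(k+3). The second targets L-smooth, μ-strongly convex functions and uses the constant momentum (1 - √(μ/L))/(1 + √(μ/L)). This is not the unified AGM proposed in the paper. The function names, the quadratic test problem, and the specific step-size and momentum choices are our own assumptions, taken from standard presentations of Nesterov's method.

```python
import numpy as np


def agm_convex(grad, x0, L, iters):
    """Textbook Nesterov AGM for L-smooth convex f (momentum coefficient k/(k+3))."""
    x = np.asarray(x0, dtype=float)
    y = x.copy()
    for k in range(iters):
        x_next = y - grad(y) / L                   # gradient step at extrapolated point y
        y = x_next + (k / (k + 3)) * (x_next - x)  # momentum coefficient grows toward 1
        x = x_next
    return x


def agm_strongly_convex(grad, x0, L, mu, iters):
    """Textbook Nesterov AGM for L-smooth, mu-strongly convex f (constant momentum)."""
    q = np.sqrt(mu / L)            # 1 / sqrt(condition number L/mu)
    beta = (1.0 - q) / (1.0 + q)   # constant momentum coefficient
    x = np.asarray(x0, dtype=float)
    y = x.copy()
    for _ in range(iters):
        x_next = y - grad(y) / L           # gradient step at extrapolated point y
        y = x_next + beta * (x_next - x)   # fixed momentum; beta -> 1 as mu -> 0
        x = x_next
    return x


# Hypothetical test problem: ill-conditioned quadratic f(x) = 0.5 * x^T A x, L = 1, mu = 0.01.
A = np.diag([1.0, 0.01])
f = lambda x: 0.5 * x @ A @ x
grad = lambda x: A @ x

x_c = agm_convex(grad, [1.0, 1.0], L=1.0, iters=500)
x_s = agm_strongly_convex(grad, [1.0, 1.0], L=1.0, mu=0.01, iters=500)
print(f(x_c), f(x_s))
```

Note that `agm_strongly_convex` needs μ as an input, while `agm_convex` ignores strong convexity altogether. This need to treat the two cases with separate algorithms is the inconsistency that the paper's unified AGM is designed to remove.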
